PsyNet: the online human behavior lab of the future

A scalable, open platform for high-powered, interactive, and cross-cultural behavioral experiments.

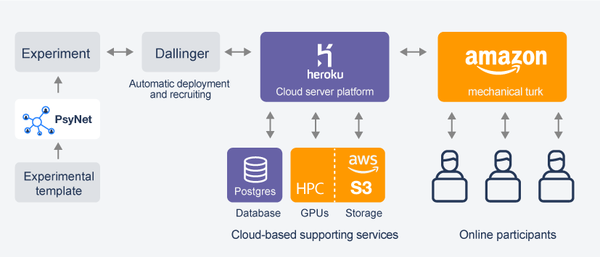

PsyNet is an open, scalable experimental platform for running high-powered online behavioral studies—including large-scale interactive experiments that are difficult or impossible to run in traditional laboratory settings. It supports experiments with rich timelines, adaptive algorithms, and real-time interaction between participants, while also providing tooling for recruitment, monitoring, and data management.

Over the past decade, online participant pools have enabled new classes of behavioral research: large social networks, cultural transmission, governance decisions, and high-dimensional perceptual modeling. PsyNet was designed to make these studies reusable, auditable, and easier to deploy, while maintaining strong standards around privacy, ethics, and reproducibility.

PsyNet builds on the open-source Dallinger framework and extends it with modular components for complex experimental structure, adaptive sampling, real-time computation, and a pipeline that supports replication from source code. It is intended for experiments spanning perception, learning, cultural evolution, collective intelligence, and human–AI interaction.

At full scale, PsyNet provides a set of core capabilities that together function as an “online human behavior lab”: reusable experiment components, automated recruitment and payment workflows, robust data storage, end-to-end reproducibility, privacy and security safeguards, support for diverse recruitment, automated data quality assurance, real-time monitoring, and integration with cloud computation.

This infrastructure makes it possible to run experiments that combine: (i) large-scale recruitment, (ii) structured multi-stage designs, (iii) interactive or networked participant dynamics, and (iv) computational models in the loop—without requiring bespoke engineering for each new study.

What PsyNet enables

- Massive interactive experiments involving hundreds or thousands of participants.

- Modular building blocks for multi-stage designs, networks, and feedback loops.

- Integration of adaptive algorithms and model-based experimentation (e.g., sampling from subjective distributions).

- Automated infrastructure for recruitment, monitoring, compensation, storage, and reproducible deployment.

- Experiments that scale across languages, sites, and populations, including cross-cultural and developmental research.
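To make "sampling from subjective distributions" more concrete, the following is a minimal, self-contained sketch in the spirit of a Gibbs-sampling-with-people chain: at each iteration one stimulus dimension is opened up as a slider, a response sets its value, and the updated stimulus is passed on to the next iteration. It does not use PsyNet's API. The function names, the Gaussian "subjective density", and the simulated participant are all hypothetical stand-ins for real human responses.

```python
import math
import random


def subjective_density(point, target, width=0.15):
    """Hypothetical unnormalised density a simulated participant holds over stimuli."""
    sq_dist = sum((p - t) ** 2 for p, t in zip(point, target))
    return math.exp(-sq_dist / (2 * width ** 2))


def simulated_slider_response(stimulus, dim, target, rng, n_positions=21):
    """Stand-in for a human slider trial (an assumption, not real data).

    The slider varies one dimension while the others stay fixed; a position is
    drawn with probability proportional to the subjective density.
    """
    positions = [k / (n_positions - 1) for k in range(n_positions)]
    weights = []
    for pos in positions:
        candidate = list(stimulus)
        candidate[dim] = pos
        weights.append(subjective_density(candidate, target))
    return rng.choices(positions, weights=weights, k=1)[0]


def run_chain(n_iterations, n_dims, target, seed=0):
    """Run one chain, updating a single dimension per iteration (cycling through dims)."""
    rng = random.Random(seed)
    stimulus = [rng.random() for _ in range(n_dims)]
    history = [list(stimulus)]
    for i in range(n_iterations):
        dim = i % n_dims
        stimulus[dim] = simulated_slider_response(stimulus, dim, target, rng)
        history.append(list(stimulus))
    return history


if __name__ == "__main__":
    # Hypothetical 3-dimensional stimulus space scaled to [0, 1].
    chain = run_chain(n_iterations=30, n_dims=3, target=[0.2, 0.8, 0.5])
    print(chain[-1])
```

In a real study each call to the simulated response would be replaced by a trial completed by a human participant, and the visited stimuli would be treated as samples from the population's subjective distribution.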

Why it matters

PsyNet helps make behavioral science more scalable, interactive, reproducible, and globally inclusive—supporting research on perception, learning, cultural transmission, collective intelligence, governance, and human–AI systems in controlled, ethical, and computationally powerful online environments.

Related Publications

2020

- Gibbs sampling with people. In Advances in Neural Information Processing Systems, 2020

- Cultural familiarity and musical expertise impact the pleasantness of consonance/dissonance but not its perceived tension. Scientific Reports, 2020

2017

- How social information can improve estimation accuracy in human groups. Proceedings of the National Academy of Sciences, 2017

- Locally noisy autonomous agents improve global human coordination in network experiments. Nature, 2017

2016

- Peer review and competition in the Art Exhibition Game. Proceedings of the National Academy of Sciences, 2016

2015

- The spontaneous emergence of conventions: An experimental study of cultural evolution. Proceedings of the National Academy of Sciences, 2015

2008

- Markov chain Monte Carlo with people. In Advances in Neural Information Processing Systems, 2008

2006

- Experimental study of inequality and unpredictability in an artificial cultural market. Science, 2006